Mar. 10th, 2020

An Introduction to Integer Complexity

Mar. 10th, 2020 09:04 amThere are a lot of times people talk about famous open mathematical problems. But less attention is paid to some of the problems that are both simple to state and aren't very famous at all. Let's talk about one of those not so famous but still simple open problems.

We're interested in the minimum number of 1s needed to write a positive integer n as a product or sum of 1s, using any number of parentheses, and we'll call that ||n||. For example, the equation 6=(1+1)(1+1+1) shows that ||6|| is at most 5, since it took 5 1s. A little playing around will convince you that you cannot write 6 using just four or fewer 1s, so it is really is the case that ||6||=5. We call ||n|| the integer complexity of n.

Now here's the problem: Does every power of 2 have a most efficient representation the obvious way? That is, is it is always true f(2^k)= 2k? For example, 8=(1+1)(1+1)(1+1) and in fact ||8||=6. Similarly, 16 = (1+1)(1+1)(1+1)(1+1), and ||16||=8. (Note that we sometimes have representations which are tied for writing it this way; for example we can also write 8 = (1+1+1+1)(1+1) but that also takes 6 ones, not fewer).

One might think that the answer should be obviously yes, so let's look for a moment at powers of 5. Does every power of 5 have the obvious representation as best? We'd expect from ||5||=5, to get that ||25||=10, and we do. The pattern continues with ||5^3||=15, and ||5^4||=20, and ||5^5||=25, but then it breaks down, ||5^6||=29. This in some sense happens because 5^6-1 has a lot of small prime factors. But it isn't obvious that something similar couldn't happen with powers of 2. This problem was as far as I'm aware, first proposed by Richard Guy. This is a problem which is dear to me and one which I'm responsible for some of the progress on with Harry Altman who has written a lot about this problem on his Dreamwidth discussing his published works on the problem.

My intuition on this problem keeps jumping back and forth on whether it is true or not. Right now, I'm leaning towards it being false.

I've mentioned before that there are a lot of simple to state but not so incredibly famous open problems. I want to talk about two thematically related problems, Lehmer's Totient Problem, and Carmichael's Conjecture. These problems are well known to number theorists but don't have the same general fame as say Goldbach's Conjecture or the existence of odd perfect numbers.

Recall two numbers are relatively prime when their greatest common divisor is 1. For example, 15 and 8 are relatively prime. But 21 and 15 are not relatively prime because their GCD is 3. We'll define phi(n) to be the number of positive integers which are less than or equal to n and relatively prime to n. This function is known as the Euler phi function. For example, phi(9) =6 because under 9 we have 1,2,4,5,7 and 8 as numbers which are relatively prime to 9. Note that if p is a prime, then phi(p)=p-1. For example, phi(5) =4 since the numbers which are less than or equal to 5 and relatively prime to 5 are everybody: 1,2,3 and 4. (If you haven't seen the phi function before, it might be worth playing around with. Can you find phi(20)? What is phi of a power of 2? What is phi of a prime power in general?)

For a given positive integer a and another integer n we want to understand how big the smallest positive integer k is so that a^k leaves a remainder of 1 when we divide by n. For example, if a =2 and n =5, then one may take k=4, since 2^4 = 16 which leaves a remainder of 1 when we divide by 5. Similarly, if a=4 and n=7, then k = 3, since 4^3 = 64 which leaves a remainder of 1 when divided by 7.

Such a k doesn't always exist for a given n. If a and n share a common factor greater than 1, then so will a^k and n, and so the remainder of a^k when divided by n will also share the common factor, and so the remainder cannot be 1. For example, let's look at powers of 2 and their remainder when we divide by 10. We have 2,4, 8, 16, 32,64, 128, 256. When we divide by 10, we get as remainders 2,4,8, 6, 2,4,8, 6 ... And it keeps repeating this way.

But maybe there always is such a k as long as a and n are relatively prime. This turns out to be the case.

The first step towards proving this was due to Fermat. He proved what is now often called "Fermat's Little Theorem" (to contrast it with "Fermat's Last Theorem" but confusingly also abbreviated as FLT) which was that if p is prime, and a is not divisible by p (hence they must be relatively prime) then a^(p-1) leaves a remainder of 1 when one divides by p. So when n=p, we can in this situation always take p-1. The number p-1 may remind you of something, which we had earlier. When p was prime we had phi(p)=p-1. (A good exercise is to verify this works with p=7. That is, check that a^6 always leaves a remainder o 1 when you divide by 7 for a=1,2,3,4,5 or 6.)

And this is in fact the proper generalization! Euler proved a much stronger result: Recall we defined phi(n) be the number of positive integers which are less than or equal to n, and which share no common factor greater than 1 with n. Then Euler proved that if a and n share no common factor, then aphi(n) leaves a remainder of 1 when I divide by n. So, for k we care about is always at most phi(n). One might be interested in given an a and n what is the least value of k, and that's a delicate question that my own PhD work touched on. But that's a separate question. Phi(n) also shows up in some practical cryptographic applications, but that's not our emphasis today.

D. H. Lehmer in the 1930s was interested in how if p is a prime, then phi(p)=p-1. Lehmer noted that if n is composite then obviously phi(n) has to be strictly smaller than n-1, but maybe it fits in neatly to n-1. That is, maybe there is a composite number where phi(n) divides n-1? Lehmer conjectured that this was not the case. We don't know, but we do know that if there is such a number it needs to be pretty big (greater than 10^22 ), and it needs to have at least 15 distinct prime factors. If someone has the time and effort with some decent amount of computing power, they could likely improve this substantially. The technique used to obtain that bound is a little more clever than just checking every number under 10^22, but it doesn't involve deeply subtle number theory. My suspicion is that someone who is a good algorithmist and with some access to decent computing power should be able to improve that bound.

The other conjecture worth talking about in this context is Carmichael's Conjecture. Carmichael noticed that some numbers never arise as the value of phi(n). For example, phi(n) is always even if n >2. To see this note that if b is relatively prime to n, then so n-b, so after 2, numbers relatively prime to n and less than n always come in pairs. So any odd number other than 1 is never the value of the phi function. With a bit more work one can show that some other numbers don't arise either. For example, phi(x)=14 has no solutions.

But, Carmichael noticed that when there was a solution to phi(x)=k, there always seemed to be another y such that x was not equal to y and phi(y)=k. For example, we saw earlier that phi(5)=4. But phi(8) =4 also, since 1, 3,5 and 7 are the numbers which are less than or equal to 8 and relatively prime to 8. Carmichael conjectured that this was always true. That is, if phi(x)=k had a solution, then there was always more than one solution. Another similar example is how we saw that phi(9)=6, but it is also the case that phi(7)=6. (A good exercise: There are two other m such that phi(m)=6. Can you find them?). In this case, we also have very large bounds. In particular, we know that any counterexample to Carmichael's conjecture must be greater than 10^(10^10). That's pretty big.

I mentioned Lehmer's Conjecture in an earlier post. Recently, someone asked me how one goes about proving something like the sort of result that a couunterexample needs to have a lot of distinct prime factors. I wrote an answer to them, and this is a version of that answer which may be of interest to people who read the earlier post. This post is slightly more technical than most of the other math posts currently here.

The primary technique used is the following. In what follows, I'll write capital N for a counterexample to Lehmer's conjecture, and use n for a generic integer where we aren't necessarily making that assumption. I'll implicitly assume that you've seen the Euler phi function a bit before. If not, the Wikipedia article is a good place to start . But the main takeaway we need about it is that if I have n=p^a for some prime p, then I can write phi(n) = (p-1)p^(a-1), I can multiply together the phi values at each highest prime power which divide n. For example, if n = 360, 180 =2^3 * 3^2 * 5 I can write phi(360)= phi(2^3) phi(3^2) phi(5) = (2-1)2^2 * (3-1)3^1 * (5-1) = 4*2*3*4=96. This formula will come in handy below. We won't need any other properties beyond what was mentioned in the previous post about Lehmer's Conjecture.

In the below, we'll write a|b to mean that a is a divisor of b. For example, 3|6 is true but 4|6 is false. We'll need two preliminary remarks:

First, note that any such N must be odd. To see this, note that since phi(n) is even for n>2, if N is a counterexample, then N must be odd, since if N were even, N-1 would not be divisible by phi(N).

Second, note that any such N must be squarefree (that is not divisible by the square of a prime). To see this, note that from the standard formula for phi(n), if p^2 |n, then p|phi(n).

So if N is divisible by p^2 for some prime p, then since p|phi(n), we'll have p|N-1 and p|N which is impossible.

We'll define H(n) as the product of p/(p-1) over all primes which divide n. For example, H(60)= (2/1)(3/2)(5/4) = 15/4.

Important things to note about H(n). First, H(n) is multiplicative; that H(ab)= H(a)H(b) whenever a and b have greatest common divisor 1. Second, since x/(x-1) is a decreasing function, so if we replace a prime in the factorization of n with a larger prime, the value of H goes down. For example, H(3) > H(5) and H(20) > H(45).

Notice that H(n) = n/phi(n). Now, if phi(N)|N-1 for some composite N, then k phi(N) = n-1 for some k >1, and therefore we have H(n) = n/phi(n) > (n-1)/phi(n) = k. In particular, for any such N one must have H(N) > 2.

From this one immediately has that any such N has to have at least three distinct prime factors. To see this, note that H(15) = (3/2)(5/4) = 15/8 < 2, and again replacing any prime with a larger prime would result in H being even smaller.

To give a flavor of the general method, let's sketch a proof that N needs to have at least five distinct prime factors.

One Lemma that will be helpful first:

Lemma 1: If N is a counterexample to Lehmer's conjecture and p|N for some prime p, and q|N for some prime q then we cannot have p|q-1.

Proof: Assume we had such, then by the formula for phi(n) we'd have p-1|N-1 and hence q|N-1 since q|p-1. But we cannot q|N and q|n-1.

(For example, this Lemma shows that we cannot have both 3|N and 7|N. Very handy little bit.)

Theorem: There is no such N with three distinct prime factors.

Proof: Assume that N is such an N. Note that (5/4)(7/6)(11/10) < 2. So our smallest prime factor must be 3. So 7 does not divide N by our Lemma. Similarly, since (3/2)(11/10)(13/12)< 2, our second largest prime factor must be 5, and thus by our Lemma our third prime factor cannot be 11. It also cannot be 13 by our Lemma. We now need a prime p (3/2)(5/4)(p/(p-1) > 2. But solving this inequality one will find that one needs p<17, so there are no primes which remain to check.

Now we'll sketch the same technique for the slightly harder case of four distinct prime factors. But the above shows most of the central ideas. Below will be essentially just an elaboration of the same technique.

Theorem: No such N has four distinct prime factors.

Proof:We'll break this down into two cases, based on whether 3 is a prime factor of N.

Case I:Assume 3|N. By our Lemma, we cannot have 7, 13 or 19 as divisors of N. Now, we'll break this down into two subcases based on whether 5|N.

Case Ia: 5|N. In that case, by our Lemma we have that 11 does not divide N. Let's break this down slightly further. Note that if the second largest prime factor of N is 29 then since (3/2)(5/4)(29/28)(47/46)< 2 we have a contradiction. (Exercise: Why was I able to get away with skipping 31, 37, 40 and 43 as possible prime divisors?). So we have some subcases where the third prime factor is 17, 23 or 29. If the third prime factor is 17, then we must have for the fourth prime factor p, that (3/2)(5/4)(17/16)(p/(p-1)) > 2. Solving for this, one will find that one must have p <= 257. Now, checking all primes which are at most 257 will find that none of them work. Similarly, one can do the same thing if the third prime is 23 or 29. (If one is lazy and is doing this with a computer one doesn't even need to calculate the precise largest bound for when (3/2)(5/4)(23/22)(p/(p-1)) > 2, since we know it must be no higher than 257. The same remark applies to 29.)

Case Ib, where 5 is not a divisor of N. Then we have (3/2)(11/10)(17/16)(23/22) < 2 so we're done. (Note that if this hadn't been less than 2 we could have used that we can't actually have both 11|N and 23|N so we could have broken this down further but we didn't need to).

Case II: We've assumed that 3 doesn't divide N. Note that (5/4)(7/6)(11/10)(13/12) < 2, so we're done. (Again note that if this had been too large we could have broken down into subcases depending on whether 5 divides N and then thrown 11 out in one of them.)

That's the basic technique. In practice, one wants to do as much of this as one can via computers and there are a few other ideas and tweaks which one can do to save on case checking.

Richard Guy, 103

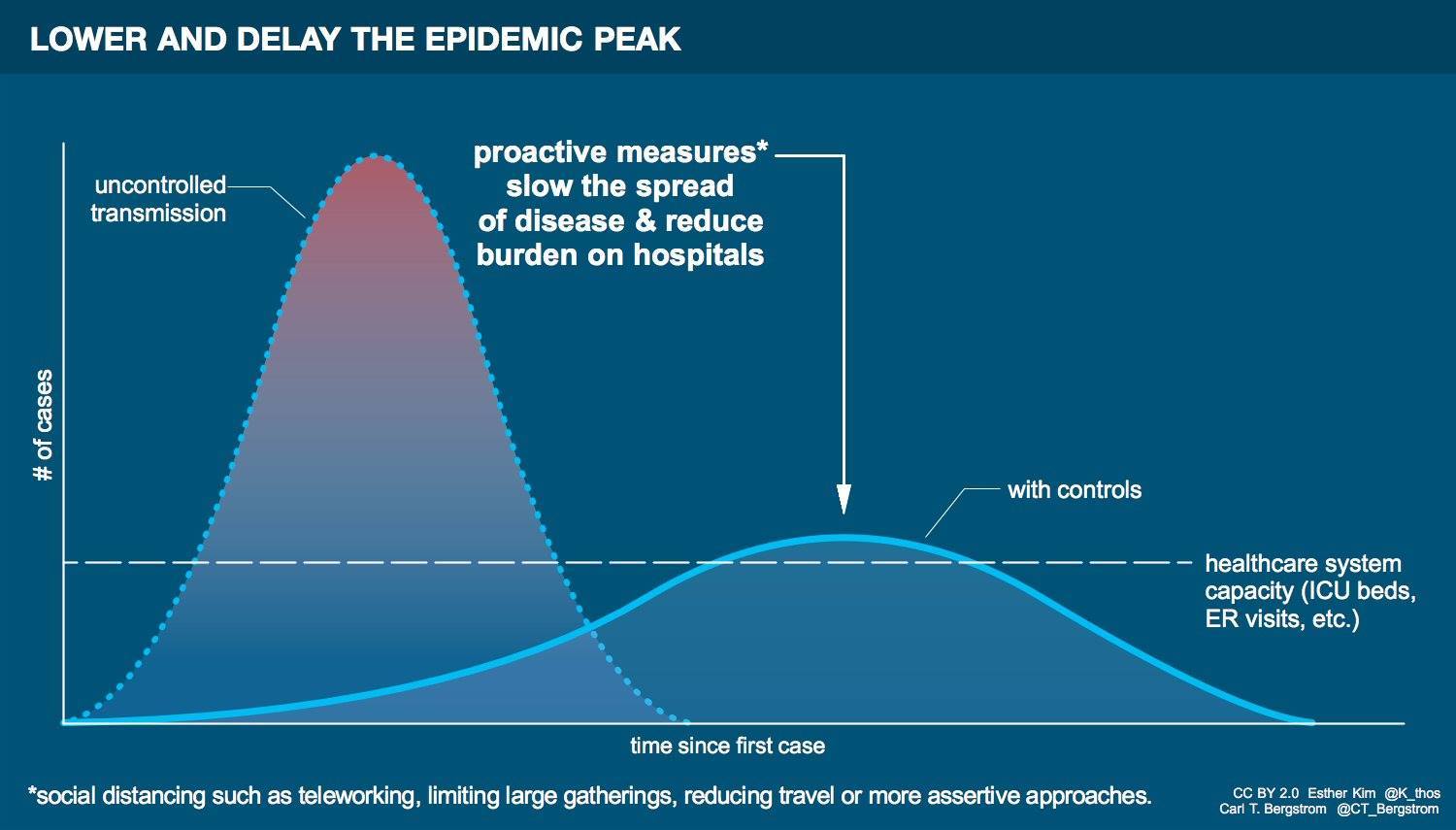

Mar. 10th, 2020 09:26 amThe most common basic model of disease spread is the SIR model , and it turns out that simple versions of this model work pretty well empirically for many diseases. The basic idea of the model is that one has three compartments representing three types of people, people who are susceptible to the disease but are uninfected, people who are infected, and people who have recovered. People move from the susceptible to the infected category based on the number of people infected, with some parameter representing how likely an infected person is to infect new people. People move from the infected to the recovered population at some fixed probability. Strictly speaking "recovered" for our purposes also includes people who died and thus aren't spreading the disease but that distinction doesn't matter much for this sort of model. The basic model assumes that once one is in the recovered category one is either dead or immune.

Right now one of the biggest issues is simply how many unknowns there are. It could turn out that all three of these issues above aren't that severe. Or it could turn out that all three are substantially in play. We don't completely know at this point, and have a worrying amount of evidence that all three of these issues are cropping up.